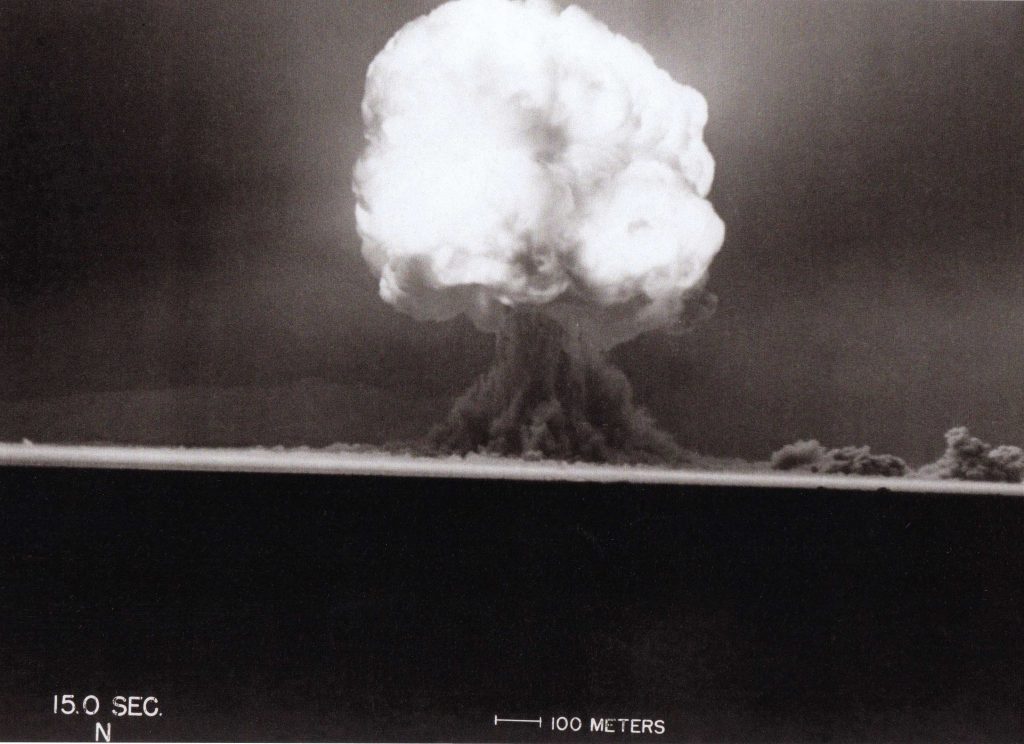

The Trinity test, 15 seconds after detonation

Recently, I’ve been thinking a lot about the Manhattan Project (probably because I just watched the new Oppenheimer movie) and the inevitability of the atomic bomb. This bomb was going to get built no matter what. If the physics exists, someone is going to make the bomb eventually. No doubt about it. I think this part lingers with me because of the parallels one can draw between physics and the intelligence sciences (AI, neuroscience, psychology, etc.). Addictive software that harvests information for social control already exists. This data can easily be exploited in a myriad of ways using AI to cause harm for political or financial gain. We have all the uranium we need; the atom just needs splitting.

The Americans ended up making the atomic bomb because having it before everyone else benefited them so greatly. More importantly, they built it first because they had the power to mine the uranium and throw money at researchers, not because they would do it best. Similarly, the corporations with all the data at their disposal and money to throw at researchers are going to be the first to build the AI of our future, and they’ll build an AI that benefits themselves the most. And what increases the sales of Meta (Facebook), TikTok, Apple, Microsoft, etc. more than addiction, manipulation, and misinformation?

Thus, in our current age of information overload, this bomb will not be an explosive. In fact, when the bomb goes off, you will neither see nor hear it. Picture personalized ads generated by an AI trained on what kinds of posts trigger your impulsivity, so good at disguising themselves that they blend seamlessly with every other post that came before. Phishing accounts trained to befriend and coerce victims, preying on those with a desire to maintain online friends. A country invades a neighboring state, then floods the internet with AI-controlled puppet accounts claiming it is rightfully reclaiming its homeland, citing AI-generated historical records. They don’t even have to be all that convincing to be effective, just plausible enough to plant that seed of doubt.

AI, like any other tool, can do nothing on its own. The headline reads “Car Rolls Down Hill and Kills 4,” but you parked your car on a hill with no emergency brake. The biggest threat is not AI itself. AI will become the most revolutionary technology of our lifetime. The only threat AI poses to us is that of its wielder.

How Can We Safely Regulate the Creation of AI?

Now, no laws can effectively stop the research behind a weapon of war. What informing the public and passing laws can do is stop these weapons from being built right under our noses, throwing a wrench into whatever TikTok or Meta are planning to do next.

So, what should be done to ensure safety in the AI space? I, obviously, don’t have an answer to this question. It would be like asking someone outside of the Manhattan Project how to regulate nuclear physics such that we wouldn’t build a bomb. I can only share a couple of ideas that I’ve landed on while reading about this topic.

Disclosure to the User

For AI to be used ethically in software, its usage must be disclosed to the user, and the user must be given the ability to opt out in some manner if necessary. While this is especially true in social media feed-like services, I believe this should extend to most, if not all, uses of AI. If my input is visible to a program that can learn or has learned, how that input is used should be disclosed to me.

Developmental Restrictions

The engineering of AI cannot be done in the same manner that software is engineered. General-purpose AI can quickly become dangerous, especially if it’s calibrated for a different task. Not to mention, if its values are set by a megacorporation, your application is riddled with biases from the top down; just look at ChatGPT, Bing, Bard, etc. As an alternative, I would love to see more tools for training AI (this would also help alleviate the dependency many developers have on companies like OpenAI).

None of this is effective without education, of course. As we stand at the cusp of our AI revolution, the innovation will happen no matter what. What needs to be done is the education of the masses on AI usage and safety. People must understand what is and isn’t shady work; otherwise, we end up with another failure in need of fixing, like social media.